And yet if you look at the numbers—team size, number of CRO experts employed and volume of A/B-Tests per year—you can’t help but conclude that there are only a few brands that are consistently growing their experimentation teams, effort and outcomes.

Within our 7-member DDMA Experimentation Committee, we’ve all worked with brands where experimentation thrived for a while, then suddenly slowed down or bounced back (ABN AMRO, Essent, KPN, Vodafone, Tui, Ayvens, Adidas, Tommy Hilfiger, Philips, NS and Bijenkorf, to name a few).

The reasons for this ‘tidal’ pattern in experimentation vary. In some cases the cause was organizational: new management, mergers, acquisitions or rebranding. In others it was more IT-related: re-platforming or large-scale IT projects that ‘broke’ the experimentation tooling. This has happened more often than you would think in our combined 60 years of experience: we know of at least two cases where the tooling was broken for six months to more than a year, both in companies that had just decided to ‘double down’ on experimentation.

This curious ‘tidal’ phenomenon sparked the questions behind this article: what creates these waves in experimentation efforts, and how can you keep surfing upstream, steadily building a thriving, compounding experimentation program year after year?

In this article, we hope to answer these questions and share the hard enablers needed to move experimentation from a “nice to have” to a fundamental cornerstone of the organization, regardless of leadership changes, IT projects or reorganizations.

The ‘soft’ enablers of an Experimentation Culture

Many articles about boosting experimentation focus on the ‘soft’ side of building an experimentation culture, and rightfully so. Typical soft culture tactics that an experimentation team, Centre of Expertise or dedicated squad might implement include:

- Sending a regular internal ‘Which Test Won’ email

- Maintaining a test results database

- Celebrating wins

- Failure Friday Meetups

- Having an Experiment Blueprint Guide (defining the process) and education for new staff

- Virtual and Live Expert Meetups

- Cross-team idea fertilization

- Internal Experimentation Awards or ‘Experimentation Hackathons’

- A spot in the company presentation where ‘everyone’ is present

- Posters in the lunch restaurant

- Messages on the intranet

- Etc.

This list is long and proven. Most teams passionate about spreading a culture of experimentation have used a combination of these ‘soft culture carriers’. They help draw attention to our craft and the value of experimentation for the organization.

But the real question is, do these effects last?

In our experience, even if you are nailing the ‘soft culture’ part, lasting and exponential impact only happens when the ‘hard’ culture change is in place too. In fact, the absence of a ‘hard’ Experimentation Culture might even explain the tidal waves in experimentation efforts that we so often observe in companies.

How Experimentation Culture and outcomes fluctuate like spring tides

Building an ‘Experimentation Culture’ sounds like a very hard thing to do. And to be fair, it is! Even companies that now have Experimentation in their very DNA took years to get there.

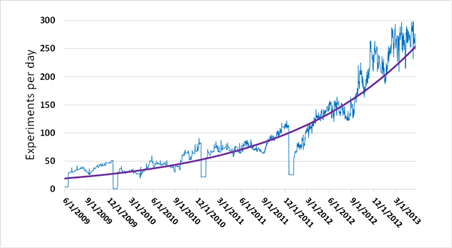

Take a company like Microsoft, which was running fewer than 250 experiments a month until they ‘accidentally’ found a single A/B test that brought them “tens of millions” in extra revenue per year [2]. By 2013 Microsoft had scaled to more than 90,000 tests a year, roughly 250 tests a day: a fifteen-fold increase in just four years (see the graph).

Even at Microsoft, though, the growth wasn’t steady. The graph shows three quarters in which experimentation pulled back significantly. There is probably a story behind that.

The same exponential, wave-like pattern can be found at Booking.com. It took them 20 years to get to the current level of over 30,000 experiments a year.

In both cases, we see it: experimentation doesn’t just grow—it pulses. It comes in waves, surges upward, then sometimes recedes. Understanding what drives those tides is key to sustaining long-term impact.

[2] Dr. Ronny Kohavi, ‘Large Scale Experimentation at Bing’, Search Quality Insights, 2013.

The Flywheel Model: How to Continuously Optimise Your Experimentation Program

One of the great things about the experimentation community is its openness. A testament to this is that experimentation people from companies like Microsoft, Booking.com and Outreach have shared how they got there in their article ‘It takes a flywheel to fly’. In it, the right path sounds very clear. They even lay out a five-step process, their so-called ‘flywheel’, for scaling experimentation:

- Lowering Human Cost: Streamlining the A/B testing process to reduce the investment.

- Running More A/B Tests: Increasing the number of tests with each iteration to support decision-making.

- Measuring Value: Capturing the impact and value added by A/B tests, especially focusing on counterintuitive results.

- Increasing Interest: Sharing results broadly to generate interest in A/B testing across different teams.

- Investing in Infrastructure: Improving A/B testing capabilities and data quality to make tests more trustworthy and easier to execute.

Is something missing?

Our experience is that these steps have been put into practice even at companies where Experimentation has shown a ‘tidal’ rather than an ‘upward’ trend. Testing usually starts as a pilot project: a pioneering team, or even a pioneering person, proves the value of experimentation with a couple of tests. They share results and calculate ROI per test and for the program as a whole. Experimentation gets management attention, after which investments in tooling, people and skills are made. So far so good.

But then, after a period of growth in Experimentation Culture and ROI, A/B testing and fact-based decision-making are suddenly in worse shape than before a couple of years down the line. So the question arises: is there something missing from the Flywheel Model? Is there something so natural to the people at Booking.com and Microsoft that they forgot to make it explicit in the model? And does that certain something at times ‘go missing’ in companies that show the tidal pattern?

The 5 Hard Drivers of an Exponential Experimentation Program

By the ‘Hard Drivers’ of an experimentation culture we mean the things you need to have in place before experimenting becomes ‘standard procedure forever’. We feel these ‘Hard Drivers’ are missing or understated in the Flywheel model. The checklist below helps you assess whether the ‘hard aspects’ of Experimentation are covered. Use this list to get Experimentation ‘unstuck’ from the tidal mode, or to avoid that mode altogether:

- Testing is in the ‘Definition of Done’ of Product (journey) Owners & Customer-facing teams

The ‘definition of done’ outlines the criteria that must be met before a product owner can consider a feature, journey or page done. If Experimentation isn’t part of that definition, it becomes all too tempting for product owners to prioritize ‘velocity’, releasing features quickly and calling them a success.

What happens then is that they ship as many features as possible, ignoring the quality, sales and CX impact of those features. The classic red flag: ‘Really interesting these Experimentation cases you’re presenting, but we are too busy to test.’ That sentence is a blood red flag immediately telling you: this is a feature factory!

So how do you fix it? Find the Product Owner who actually does care about delivering value, someone motivated by outcomes and not by the number of new features delivered. Work with their team to build a strong case, present it to management and advocate for better KPIs (and proper measurement) across all product teams.

This approach is called ‘A Coalition of the Willing’. It is much more fun and effective to work with them than to try to win over the ‘Coalition of the Whining’. Mind the spelling: Whining, not Willing!

- Testing as a KPI for Managers and teams

Similar to product owners: if managers don’t have KPIs related to testing—or if they have too many KPIs and are allowed to trade one performance metric for the other—there will be no real adoption of testing.

Your Experimentation Culture will never thrive if you do not consistently run a growing number of actual experiments. These tests aren’t just the visible side of your soft culture-building—they’re also the hard data behind your business cases and long-term impact. So boosting the number of tests is not ‘optional’ and should be a constant point of attention, whatever changes you are working on.

Of course, a high number of tests alone is not good enough. They have to be run properly, according to a set Experimentation process or blueprint.

- Are there any dedicated A/B-Testers and Web Analysts?

It is hard to build a serious testing culture with only generic digital specialists.

In many companies, we still see teams without dedicated Web (and App) Analysts or Conversion Specialists. That’s like expecting UX or design to be done by generalists. The same goes for tax compliance or IT security. Put simply: if you don’t invest in dedicated A/B-Testers or Web Analysts, you’re actually saying that you do not take Experimentation seriously.

- Are AB-Tests in the company calendar?

Not listed means ‘not important’.

If tests are not on the company (marketing) calendar, teams will inevitably schedule product releases or campaigns right in the middle of carefully crafted and planned tests.

- Is testing part of onboarding & training?

If you do not make it clear from the get-go that testing is expected and needed, it is clearly not a priority.

New joiners are your best chance to reinforce cultural change and ways of working. If they’re not educated about the desired way of working, the ‘old’ culture will prevail and you’ll miss that opportunity.

Now that we’ve defined our top 5 hard drivers of experimentation culture, it’s time to bring them together.

Use the table below to map out the perfect mix. Make sure to pick a few from each column. Then form a Coalition of the Willing and/or find an ambassador high in the organisation who can share your results and keep asking everyone questions like: Did you test that? Did you evaluate that campaign end-to-end? Did you quantify the impact of that feature in terms of CX and business value?

Checklist of Experimentation Enablers

| Soft enablers of Experimentation | Hard enablers of Experimentation |
|---|---|
| ‘Which Test Won’ email and a test results database | Get testing into the ‘Definition of Done’ of product or journey owners and customer-facing teams |
| Virtual and live meetups to exchange knowledge, tips, best practices and inspiration | Managers and teams get a KPI on testing that is mentioned in the (public) quarterly report |
| Mastery training for the team, plus a process that includes a pre-flight check, checks on multiple devices and meta-analysis of results | Employ dedicated A/B-Testers and Web Analysts |
| A spot in the company presentation, and internal experimentation awards or ‘hackathons’ with the C-suite as jury | Get AB-Tests into the company calendar |
| Participate in a testing awards contest | Make testing part of onboarding and training |
| Help teams create test roadmaps and help them run tests | |
| Improve funnels in workshops, proving that sequences of small incremental improvements can have long-term impact | |

Keep in mind: sometimes it doesn’t matter whether an enabler is soft or hard. Even if you have all the hard enablers in place, experimentation will become less important if management doesn’t ask ‘Did you test that?’ at every presentation or investment request. This is what the saying “Culture has strategy for breakfast” means.

Culture Change isn’t Instant: Start slicing the C.H.A.N.G.E. model

Overnight culture change is a pipe dream. It simply doesn’t happen. So instead of trying to eat the whole ‘Culture Elephant’, you need a way to slice it up. In order to do this you need to treat culture change as an Agile project: Build – Measure – Learn.

To help with this we’ve mapped out a simple six-step framework that spells C.H.A.N.G.E.

- C – Coalition: Find a team that is willing to run experiments – a coalition of the willing. Don’t waste energy trying to convince teams that aren’t ready. Start where there’s curiosity or motivation. Prove it works there, then scale. Once stakeholders see the results, intrinsic motivation becomes less important. Experimentation becomes expected. It becomes a KPI.

- H – High Impact: If you only have a few shots to show the value of experimentation—make them count. Don’t waste time on a button color test. Choose experiments that directly support key goals of a journey, page, or app. Think: What would genuinely improve the user experience or business outcome here? Then test that.

- A – Affordable: In the early stages, experimentation should be the opposite of large, high-stakes projects. Use research and analysis to find low-effort, high-impact opportunities. Keep it lean, so you have a couple of shots to run several tests—even if a few fail. Use an Effort–Gain matrix to prioritize: in the beginning, go for low effort, high gain. Save your time and credibility for meaningful wins.

- N – Notable: Think like a PR manager. Every company has its current obsessions: a hot KPI, a product in the spotlight, a strategic segment, a ‘thing of the hour’. If you can improve something that’s already drawing attention, your impact will be much more visible. People talk. And people notice.

- G – Growth: Look for tests with repeatable impact. It will bring you much further than a ‘one off’. If you find a winning variation that improves multiple landing pages, app flows, or product features—you’re not just improving one area, you’re enabling scalable growth. For example: optimizing a landing page template used across 20 product pages. If the same uplift appears in three or four versions, you’ve uncovered a multiplier.

- E – Exposure: Success needs storytelling. If you don’t share your wins, they won’t stick. Make sure your team spreads the word. And encourage others who’ve experienced the value of experimentation to become ambassadors.
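The Effort–Gain matrix mentioned under ‘Affordable’ can be sketched in a few lines of Python. This is only a minimal illustration under our own assumptions: the 1-to-5 scoring scale, the threshold of 3, the quadrant names and the example ideas are all hypothetical and not part of any standard model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExperimentIdea:
    name: str
    effort: int  # assumed scale: 1 (low effort) to 5 (high effort)
    gain: int    # assumed scale: 1 (low gain) to 5 (high gain)


def quadrant(idea: ExperimentIdea, threshold: int = 3) -> str:
    """Place an idea in one of four Effort-Gain quadrants (hypothetical names)."""
    low_effort = idea.effort < threshold
    high_gain = idea.gain >= threshold
    if low_effort and high_gain:
        return "quick win"
    if high_gain:
        return "big bet"
    if low_effort:
        return "fill-in"
    return "avoid"


def prioritize(ideas):
    """Sort ideas: quick wins first, then by highest gain and lowest effort."""
    order = {"quick win": 0, "big bet": 1, "fill-in": 2, "avoid": 3}
    return sorted(ideas, key=lambda i: (order[quadrant(i)], -i.gain, i.effort))


# Hypothetical backlog, scored by the team during a prioritization session.
ideas = [
    ExperimentIdea("Button color", effort=1, gain=1),
    ExperimentIdea("Checkout redesign", effort=5, gain=5),
    ExperimentIdea("Landing page template headline", effort=2, gain=4),
]

for idea in prioritize(ideas):
    print(f"{idea.name}: {quadrant(idea)}")
```

In the early stages you would work from the top of this list, picking the low-effort, high-gain ideas first and keeping the big bets for when the program has earned more credibility.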

Final note: Listening is the First Step toward Influence

Getting your Experimentation program noticed, and securing buy-in for the ‘hard’ side of building a strong Experimentation culture, is not just about communication, internal networking and ‘sending’ impressive results. Broadcasting results is not enough; chances are you won’t be heard or won’t be taken seriously. Why? Simple: if you want people on board, you have to understand them first. So next time you’re planning your test roadmap and pitching your experiments, try to answer these questions first:

- What is important to them, for their team or department?

- Are they familiar with Experimentation?

- What excites them? What concerns or objections might they have?

- What do they think of you and your team?

- What, in their view, would triple their success?

- How can you help them achieve their goals?

- Who else in their network might benefit from Experimentation?

Culture has Strategy for Breakfast, but What is for Lunch?

That classic phrase, ‘culture has strategy for breakfast’, means this: you can plan and design the smartest business strategy imaginable, but if culture isn’t aligned, it won’t get off the ground.

The same goes for your Experimentation program. You can make a plan that is well researched and thought through, including trend analysis, business cases and scenarios. But even if your program gets a lot of attention and goodwill, and a culture forms around your experimentation platform, it won’t really take off if the hard enablers are not in place.

So please use our checklist and let us know how it worked out for you. And if you feel you’ve made a dent in the culture, with everything checked off, then make sure to enter your cases for Experimentation Heroes 2025. Shifting culture isn’t just an achievement; it’s worth celebrating.
